Generalized Source-free Domain Adaptation

Abstract

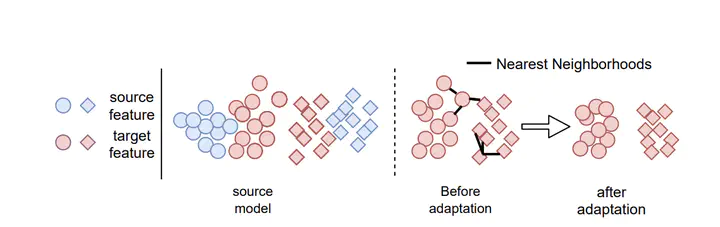

Domain adaptation (DA) aims to transfer the knowledge learned from a source domain to an unlabeled target domain. Some recent works tackle source-free domain adaptation (SFDA), where only a source pre-trained model is available for adaptation to the target domain. However, those methods do not consider preserving source performance, which is of high practical value in real-world applications. In this paper, we propose a new domain adaptation paradigm called Generalized Source-free Domain Adaptation (G-SFDA), where the learned model needs to perform well on both the target and source domains, with access only to the current unlabeled target data during adaptation. First, we propose local structure clustering (LSC), which clusters each target feature with its semantically similar neighbors and successfully adapts the model to the target domain in the absence of source data. Second, we propose randomly generated domain attention (RGDA), which produces binary domain-specific attention to activate different feature channels for different domains; the domain attention is also used to regularize the gradient during adaptation to retain source information. In the experiments, our method's target performance is on par with or better than existing DA and SFDA methods, in particular achieving state-of-the-art performance (85.4%) on VisDA, and our method works well on all domains after adapting to single or multiple target domains.
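To make the domain attention idea concrete, the following is a minimal sketch in plain Python, not the authors' implementation. It assumes one random binary mask per domain over the feature channels, and it assumes that "regularizing the gradient" means zeroing the update on channels the source mask activates. The function names, the `keep_prob` parameter, and the seeding scheme are hypothetical and chosen only for illustration.

```python
import random


def make_domain_masks(num_channels, num_domains, keep_prob=0.5, seed=0):
    """Draw one random binary channel mask per domain (hypothetical helper)."""
    rng = random.Random(seed)
    return [
        [1 if rng.random() < keep_prob else 0 for _ in range(num_channels)]
        for _ in range(num_domains)
    ]


def apply_mask(features, mask):
    """Keep only the feature channels that are active for the given domain."""
    return [f * m for f, m in zip(features, mask)]


def regularize_gradient(grad, source_mask):
    """Assumed rule: block updates on channels used by the source domain."""
    return [g * (1 - m) for g, m in zip(grad, source_mask)]


# Usage: domain 0 plays the role of the source, domain 1 the target.
masks = make_domain_masks(num_channels=8, num_domains=2, seed=0)
source_mask, target_mask = masks

target_features = apply_mask([0.5] * 8, target_mask)
safe_grad = regularize_gradient([1.0] * 8, source_mask)
```

Under these assumptions, channels reserved for the source domain receive no update during target adaptation, which is one simple way a model could retain source information while the remaining channels adapt.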

ICCV 2021. Code: https://github.com/Albert0147/G-SFDA